likePredict: A product to predict "likes" of an Instagram Post

Posted by Kyle Gallatin

Updated: Mar 30, 2017

Instagram “likes” Pose a Problem

An internal Instagram study showed that teens delete up to half of all their Instagram posts because the posts did not receive enough likes. In fact, Instagram’s new “Instagram story” feature is in part an effort to counter the vanity imposed by the “likes” metric (Business Insider).

As a result, there is a growing market need for a tool that can accurately predict the likes a post will receive for a given user. While a number of services provide basic to complex analytics, few apply predictive modeling for the average user. Hence we developed “likePredict”: a “like” predictor for use with public Instagram accounts.

Web Scraping Instagram Posts

Gathering the data for this project was the largest challenge. In recent years, Instagram has adopted an increasingly stringent API policy, requiring developers to go through a number of approval steps before being given a key. As such, we needed to scrape the data.

Instagram, like many other large organizations, has many built-in tools to limit and trap web scrapers before they collect too much data. However, there are a number of Instagram web viewers that display Instagram content without the same restrictions. For our project we used two sources: Instagim and Instaliga.

Instagim - Posts by Tag

Instagim displays photos by tag on a single page, along with each photo’s username, likes, comments, caption, filter and some relevant hashtags. Given the scope and timing of this project, we focused solely on photos under the “nature” tag.

While Instagim can be refreshed constantly to show the latest photos, we needed photos that had been posted for at least 24 hours so that they had time to accumulate likes. When clicking the “load more” button at the bottom of the page, we noticed that the website made an AJAX call with a unique key. By scraping only these keys and waiting 24 hours, we could replay the same AJAX call from the previous day and obtain photos posted the day prior (we did our best to keep the 24 hour period constant). Using BeautifulSoup, urllib and the requests library in Python, we were easily able to download each photo and collect its metrics.
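The "capture keys now, replay them a day later" bookkeeping can be sketched as follows. This is only an illustration: the key names, the dictionary layout and the `keys_ready_to_replay` helper are hypothetical, and the actual AJAX endpoint is not shown in this post.

```python
from datetime import datetime, timedelta


def keys_ready_to_replay(captured, now, min_age=timedelta(hours=24)):
    """Return keys captured at least `min_age` ago, oldest first.

    `captured` maps a pagination key (hypothetical format) to the time
    it was scraped from the "load more" request.
    """
    ready = [(seen_at, key) for key, seen_at in captured.items()
             if now - seen_at >= min_age]
    return [key for seen_at, key in sorted(ready)]


# Made-up keys and timestamps, for illustration only.
captured = {
    "key_a": datetime(2017, 3, 1, 9, 0),
    "key_b": datetime(2017, 3, 1, 21, 0),
    "key_c": datetime(2017, 3, 2, 8, 30),
}
now = datetime(2017, 3, 2, 10, 0)
ready = keys_ready_to_replay(captured, now)
print(ready)
```

Only keys old enough to have let their photos accumulate a day of likes are returned; the rest stay in the store until a later run.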

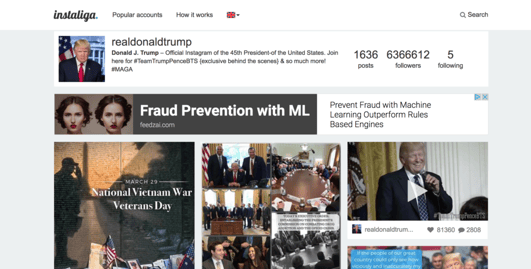

Instaliga - User Information

While we had the photo and the likes it got, we were still missing a key metric: followers. We needed a website that could be easily crawled for follower and following counts, since they would be important features in predicting the number of likes for a photo. Instaliga was easy to scrape since it allowed us to append a username to the end of a URL and then crawl for this information using Scrapy.

Additionally, we were able to scrape the metrics for the last 20 posts from a given user, giving us a baseline for their standard level of engagement with their audience. However, since each user has its own URL, the script generated too many requests and was often met with server errors. Auto-throttling Scrapy’s wait time turned out not to be time efficient, so we moved forward by hammering their server with requests and getting what data we could.
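For readers who prefer the slower, throttled route, Scrapy's AutoThrottle extension and retry middleware are configured through project settings. The fragment below is a sketch of a `settings.py`; the setting names are Scrapy's own, but every value is an illustrative assumption rather than the configuration used in this project.

```python
# settings.py fragment (illustrative values, not the project's actual config)

AUTOTHROTTLE_ENABLED = True             # adapt the delay to observed server latency
AUTOTHROTTLE_START_DELAY = 1.0          # initial download delay, in seconds
AUTOTHROTTLE_MAX_DELAY = 30.0           # upper bound on the delay when latency is high
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0   # average parallel requests per remote server

RETRY_ENABLED = True
RETRY_TIMES = 3                         # retries per request, on top of the first attempt
RETRY_HTTP_CODES = [429, 500, 502, 503, 504]  # retry on rate limiting and server errors

CONCURRENT_REQUESTS_PER_DOMAIN = 2      # hard cap on simultaneous requests to one domain
```

Lower concurrency and longer delays reduce server errors at the cost of a longer crawl, which is the trade-off described above.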

EDA & Feature Engineering

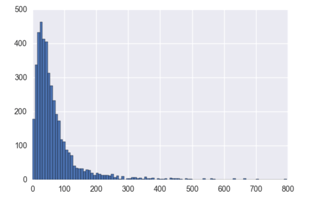

We started off with some basic EDA. The graph on the left here is a histogram of "likes" across all of our observations. As can be seen, the values are not normally distributed. Followers, following and other metrics seemed to follow the same pattern. We debated applying some basic transformations (logarithmic or Box-Cox). However, since our final model was likely to be a neural network or tree-based model, we deemed it unnecessary.

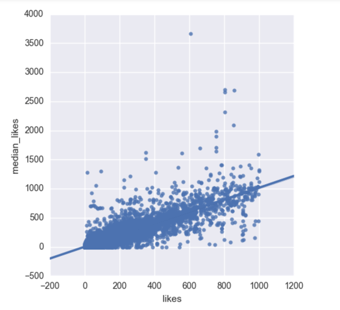

The other non-photo features were relatively straightforward. The bulk of our engineering was generating the mean, mode, min and max likes for each user’s previous posts. As intuition would suggest, all of these metrics were fairly correlated with the likes received on the user’s newest post, unless of course other extraneous factors were at work (a sudden influx of followers, a particularly "likeable" post such as a celebrity post, etc.).
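A minimal sketch of these "previous likes" features, using only the Python standard library. The function name, the feature names and the tie-breaking rule for the mode are assumptions made for illustration.

```python
from statistics import mean, multimode


def previous_like_features(likes):
    """Summarize a user's recent like counts (e.g. their last 20 posts)."""
    if not likes:
        raise ValueError("need at least one previous post")
    return {
        "prev_mean": mean(likes),
        # On ties, take the smallest mode (an arbitrary, assumed choice).
        "prev_mode": min(multimode(likes)),
        "prev_min": min(likes),
        "prev_max": max(likes),
    }


# Made-up like counts for one user.
features = previous_like_features([12, 30, 12, 45, 21])
print(features)
```

Each user contributes one such row, which can then be joined to the follower counts and photo features for that user's newest post.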

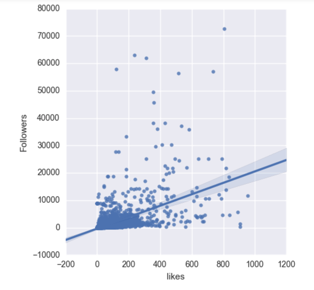

However, many other features had more complex relationships. It seems fair to assume that followers would be correlated with likes, and although a relationship is present, it is subject to a large amount of variance. These fluctuations are likely to be accounted for by a number of other factors, hence our interest in the "previous likes" metrics.

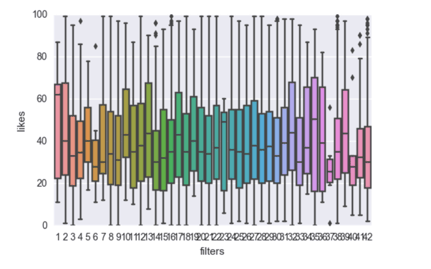

Some features, such as the filter applied to the photo, seemed to have even less of an effect than originally thought. These features would likely have a larger effect within subsets of photo type: for example, selfies may commonly use a filter to make a person’s skin look healthier. However, for our “nature” photos there seemed to be little correlation.

Photo Features - Extraction

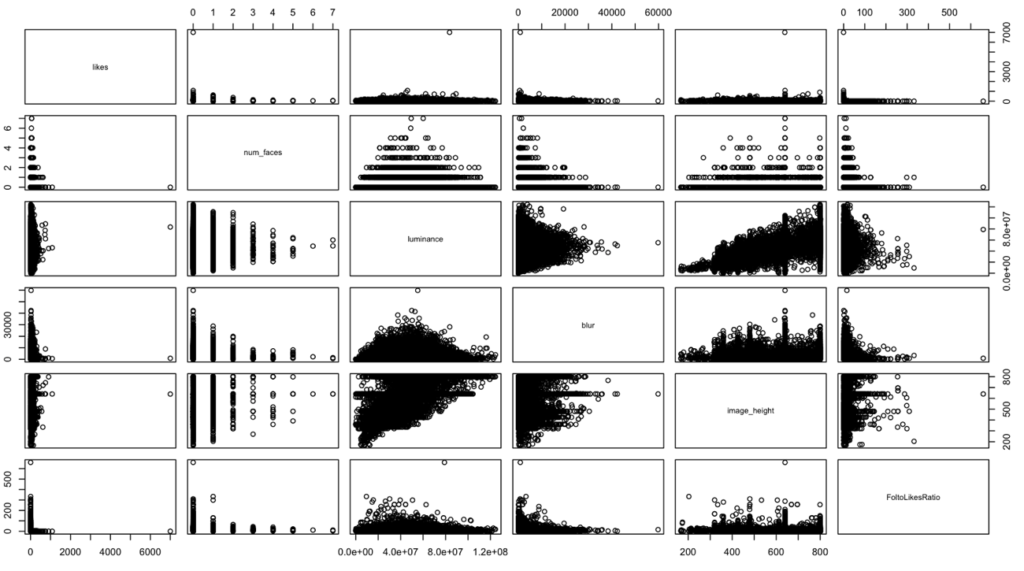

Our plan was to supplement all of the user data with information from the photos to achieve a more accurate prediction. The logic is that if a user’s followers and general account popularity define a range within which a photo’s likes should fall, the features of that photo will help narrow the prediction within that range. After turning the photos into arrays, we used the PIL library to extract summary statistics for each color band. Other features such as luminance were easy to calculate from this data.
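To show the kind of per-band statistics involved, here is a dependency-free sketch that works on a plain list of (R, G, B) tuples. PIL's `ImageStat` module offers comparable per-band means and standard deviations for real images; the feature names and the Rec. 601 luminance weights below are assumptions, since the post does not state which luminance formula was used.

```python
from statistics import mean, pstdev


def color_features(pixels):
    """Per-band mean/std plus mean luminance for a list of (R, G, B) pixels."""
    if not pixels:
        raise ValueError("need at least one pixel")
    features = {}
    for index, band in enumerate(("red", "green", "blue")):
        values = [pixel[index] for pixel in pixels]
        features[f"{band}_mean"] = mean(values)
        features[f"{band}_std"] = pstdev(values)
    # Rec. 601 luma weights: one common definition of perceived brightness.
    features["luminance_mean"] = mean(
        0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels
    )
    return features


# A tiny made-up "image": one red, one green, one blue and one white pixel.
pixels = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255)]
features = color_features(pixels)
print(features)
```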

However, we also wanted to extract more complex features. Using the OpenCV library, we were able to apply a pre-trained facial recognition model and record the number of faces found in each photo. We also measured the blur of each photo.
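The post does not say how blur was measured. One common approach, often implemented with OpenCV as the variance of `cv2.Laplacian` applied to a grayscale image, is sketched below in plain Python so that it runs without any dependencies. Treat it as an assumed stand-in, not the method used here.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over interior pixels.

    `gray` is a 2D list of grayscale intensities. Lower values suggest a
    blurrier image; any cut-off for "blurry" has to be chosen empirically.
    """
    rows, cols = len(gray), len(gray[0])
    if rows < 3 or cols < 3:
        raise ValueError("image must be at least 3x3")
    responses = []
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    return pvariance(responses)


# A featureless image versus a high-contrast checkerboard (both made up).
flat = [[128] * 5 for _ in range(5)]
checker = [[255 * ((x + y) % 2) for x in range(5)] for y in range(5)]
flat_score = laplacian_variance(flat)
sharp_score = laplacian_variance(checker)
print(flat_score, sharp_score)
```

An image with no edges scores zero, while sharp edges produce large Laplacian responses and therefore a high variance.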

Photo Features - EDA

Unfortunately, few of these photo features seemed to correlate with likes in our subset of data. We compared many of the features against likes, and against the likes/followers ratio as a way to normalize likes, but the correlations still seemed somewhat weak.
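The comparison described above can be sketched as a Pearson correlation computed twice: once against raw likes and once against likes normalized by followers. The rows below are made-up numbers used purely to exercise the code, not results from our data.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Hypothetical rows: (likes, followers, blur score)
posts = [(40, 400, 10.0), (90, 600, 20.0), (150, 1500, 30.0), (20, 100, 40.0)]
likes = [row[0] for row in posts]
likes_per_follower = [row[0] / row[1] for row in posts]
blur = [row[2] for row in posts]

r_raw = pearson(likes, blur)                  # photo feature vs. raw likes
r_ratio = pearson(likes_per_follower, blur)   # photo feature vs. normalized likes
print(r_raw, r_ratio)
```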

In the future, we plan to extract even more features, and scrape data from multiple accounts to see if photo features matter more in the realm of a single user. Comparing some of these photo attributes against the mean and median likes for users may also have been beneficial.

Model Tuning and Selection

Neural Network

We began the modeling process by constructing a basic Multi-Layer Perceptron with a single layer of input nodes and a single output node, using the Rectified Linear Unit as our activation function. After setting up this basic model using the Keras API with a TensorFlow backend, we began hyper-parameter tuning. Having tuned the model extensively, we then constructed other models for comparison.

Gradient Boosting Regressor

Given the results of the Multi-Layer Perceptron, we sought to compare it with a less complex model. Using the GradientBoostingRegressor in sklearn, we obtained much better results both in cross-validation and in predictions on our test data.

Ultimately, we compared our predictions with the actual number of likes each post in our test set received and found that 95% of the predictions were within 30 likes.
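One way to read the "within 30 likes" figure is as the share of test predictions whose absolute error is at most 30. The sketch below computes that share; the function name and the sample numbers are made up for illustration and do not reproduce our test set.

```python
def share_within(actual, predicted, tolerance=30):
    """Fraction of predictions whose absolute error is <= `tolerance` likes."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(abs(a - p) <= tolerance for a, p in zip(actual, predicted))
    return hits / len(actual)


# Hypothetical actual and predicted like counts.
actual = [120, 45, 300, 80]
predicted = [100, 50, 360, 95]
share = share_within(actual, predicted)
print(share)
```

A fixed tolerance in likes is easier on small accounts than on large ones, so it is worth reporting alongside a relative error when accounts vary widely in size.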

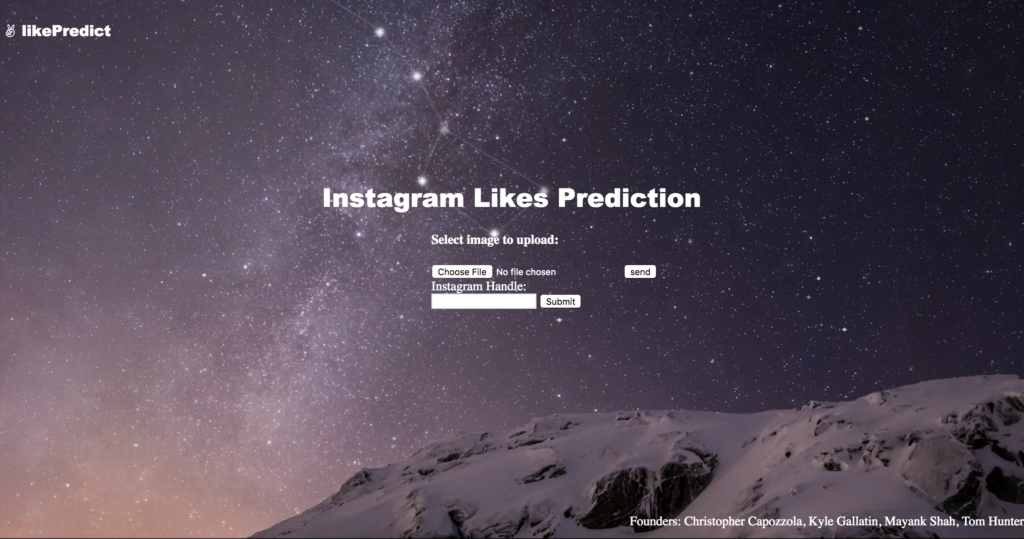

likePredict: Flask app for predicting the likes of a post

Finally, we created a front-end application using Flask. This library lets you easily link back-end Python code with HTML templates to build interactive web apps. Once launched, this would give users a simple interface to upload their image and type in their Instagram handle to receive predictions. It’s important to note that we are not web designers, so the temporary UX/UI leaves something to be desired.

Upon image upload, the back end extracts the photo features and exports them as a data frame. Upon handle input, the Scrapy spider automatically collects the user’s previous 20 posts and other relevant data.

These data frames are combined to match our model’s training columns. The model is then applied to the resulting data frame and an output prediction is generated.
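The column-matching step can be sketched with plain dictionaries. The column names, the `build_model_row` helper and the choice to fill missing columns with 0.0 are all assumptions for illustration; the real app works on data frames.

```python
def build_model_row(photo_features, user_features, training_columns, fill=0.0):
    """Merge two feature dicts and order the values to match the training columns.

    Features the model was not trained on are dropped; columns missing from
    both dicts are filled with `fill` (an assumed default).
    """
    merged = {**photo_features, **user_features}
    return [merged.get(column, fill) for column in training_columns]


# Hypothetical column names and values.
training_columns = ["followers", "prev_mean", "luminance_mean", "faces"]
photo = {"luminance_mean": 131.2, "faces": 0, "width": 1080}
user = {"followers": 842, "prev_mean": 57.5}

row = build_model_row(photo, user, training_columns)
print(row)
```

Keeping the column order identical to training matters because most regressors receive a bare array of values and have no way to tell that two columns were swapped.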

Future Directions

In the future, we’d like to get access to the Instagram API, since a Scrapy-based web application is not a model of stability and scalability. API access would alleviate the majority of issues currently present in our process and allow us to expand our models to different categories of photos. Currently, we are limited exclusively to public accounts due to data access. Additional features such as time of the week, follower involvement/network analysis, and a greater variety of image analysis would further reduce the error of our predictions within a given subset of photos. With the right amount of data and computational power, this applied model could solve some inherent issues with Instagram’s “likes” metric, and even expand to other platforms.

Kyle Gallatin

Kyle Gallatin graduated from Quinnipiac University with a biology degree in 2015. He then continued on for his Master's in Molecular and Cellular Biology, received in 2016. Having cultivated high-level skills in data science through his analytical work on pharmaceutical data, he is now seeking new data scientist and machine learning opportunities.
