Featured Talk: #1 Kaggle Data Scientist Owen Zhang

Posted by manager

Updated: Jan 30, 2015

Many thanks to Jared Hatch from ThoughtWorks for sponsoring us the wonderful space.

Many thanks to Owen Zhang for giving us A GREAT TALK! It was certainly full of joy and satisfaction!

(Photo: As a tradition of the meetup, we voted for the outcome of the meetup. Thumbs are up in the air means " we are super happy and satisfied"!)

===========================================

Original event announcement: www.meetup.com/nyc-open-data-events/219370251/

Speaker:

Owen Zhang is the Chief Product Officer at DataRobot. Owen spent most of his career in the Property & Casualty insurance industry. Most recently Owen served as Vice President of Modeling in the newly formed AIG Science team. After spending several years in IT building transactional systems for commercial insurance, Owen discovered his passion in Machine Learning and started building insurance underwriting, pricing, and claims models. Owen has a Masters degree in Electrical Engineering from the University of Toronto and a Bachelors degree from University of Science and Technology of China.

Topic: No. 1 Kaggler Owen Zhang will share a few tips on modeling competitions!!

This is an opportunity to learn the tips, tricks, and techniques Owen employs in building world-class predictive analytic solutions.

He has competed in and won several high profile challenges, and is currently ranked No. 1 out of a community of over 240,000 data scientists on Kaggle.

Video Recording:

Desktop Recording:

Slides:

The following transcript is kindly contributed by NYC Data Science Academy Bootcamp Students from Feb-April, 2015 Cohort, including Andrew Nicolas, James Hedges, Alex Alder, Sundar Manoharan, Malcom Hess, Punam Katariya, Korrigan Clark, Sylvie Lardeux, Philip Moy, Julian Asano, Jiayi (Jason) Liu, Tim Schmeier.

Special thanks go to James Hedges and Alex Alder for managing this transcription project with their classmates.

(Photo: Owen Zhang stayed after the meetup and answered more questions from the audience.)

Featured Talk: #1 Kaggle Data Scientist Owen Zhang

Thank you very much for coming and thanks Vivian, for the generous introduction. I’m sorry to say this, but it sounds much more impressive than it actually is. I want to tell you a little bit of background. I used to work in the insurance industry. I worked several years in Travelers and several years at AIG. So one thing that I learned from my corporate career is that it’s very very important to manage your expectations. We didn’t do a very good job, so I’m trying right now. So I’m going to give about one hour of talk. We’ll cover a few topics and I’ll cover a few topics including my personal take on how to do well in Kaggle competitions. If you have any questions, please wait until the end. We have about a half hour of open Q&A session so everybody will have a chance to ask questions.

Today, I’m going to cover these few topics. First, I’m going to give a brief introduction on how data science competitions are structured, at least how Kaggle does it. I’m going to cover a few pointers on how I do it: philosophy, strategy and also techniques. Then I will have two example competitions, so two that did I relatively well. I just want to use that example to show what I actually do and to show that each competition is quite different. The last I want to cover is the things that we learn from a competition are they useful in the real world or not. I do want to answer that question at the end because actually I get asked that question quite often.

Structure of a data science competition

How is a data science competition structured? At least, how is a supervised learning competition structured? Usually, the host will have all of the data and then they will split the data into three pieces. There’s the training piece, which we can all download, and we have both the predictors and the target. And then there is the public leaderboard piece. We havetraining for all three pieces, the difference is what we know about the target. So we know all the targets exactly for training. Then, we get a summary metric on the target for the public leaderboard.

After you build a model on the training data, you apply the model to the public leaderboard data and make predictions. Then you upload the predictions. Because the host, has [the third piece on] the server, they have all the target variables, they compare your predictions with the actuals to see how well your prediction predicts it. If I tell you summary score, that’s where the public leaderboard is. Throughout the competition, it will last a few months, you will get constant feedback of how well your model performs on that piece of data compared to all the others. So you can get some form of idea if you’re doing the right thing or not. So at the end of the competition, what really determines the outcome is the private leaderboard. So you’ll have some sort of idea if you’re doing the right thing or not.

But at the end of the competition, what really determines the outcome is actually the outcome is actually the private leaderboard ranking. Which you only get to see once. That’s after the deadline passes then they will switch over from the public to the private. It depends on the competition. It’s more often than not [the public and private leaderboards] are the same. Sometimes the public leaderboard and private leaderboard are very different. So there’s quite a bit of uncertainty involved.

Why is the competition structured that way? Because this forces us to do what the predictive model is supposed to do: that is to predict what we haven't seen before. So the private leaderboard matters most because we have absolutely no knowledge about the target variables in the private leaderboard data. That is how our model would be used in the real world. So whenever we build a supervised learning model, the purpose is to use the model to predict something we haven't known yet. So if we know something already there is no reason to predict it, we can just take a look at it. So, it is very important to evaluate the model on data that the model does not have knowledge of.

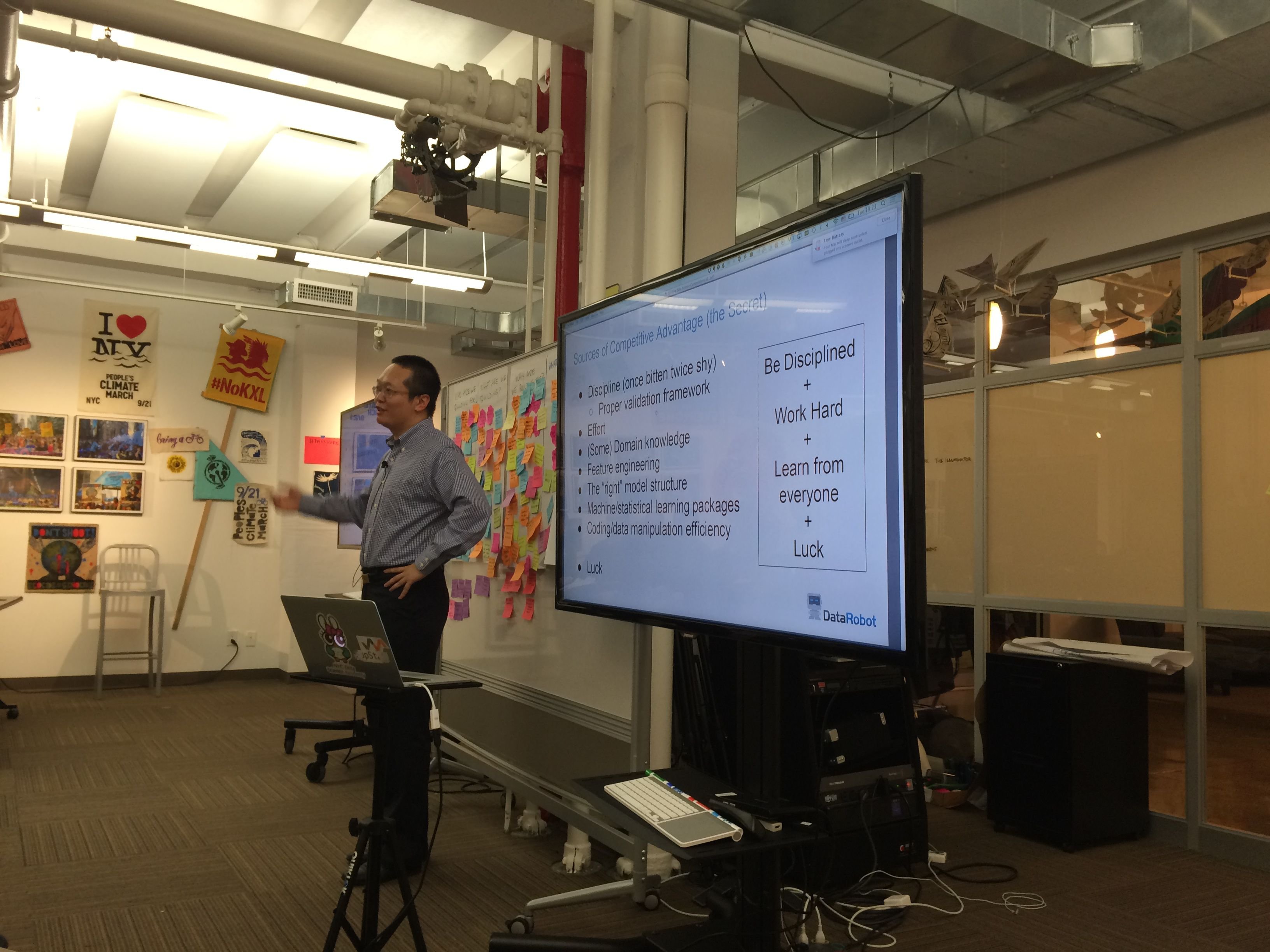

(Photo: Owen is sharing his secret why he could win many Kaggle competitions.)

Philosophies and strategies

So a little philosophy: I am actually quite fond of philosophy because when it comes time to predict something you have no knowledge of, there is usually nothing to go by so you have to fall back on philosophy.

So, I'm sure that most of you have certain data science background, and we all know that one of the biggest pitfalls in building a model is overfitting. Overfitting means that you have a training data set and you build a model on the training data set, you can throw a lot of factors at it and try all kind of different things. You can build a model that fits the training data set very well, however, the model may not generalize. So you can have a model that does exceptionally on the training data set, but if you build a model that predicts very well on things you already know, but it doesn't predict well on things you don't, then that would be a model that is overfitting.

In this case, in a lot of Kaggle competitions, overfitting is the biggest risk. So, what I learned through the years is that there are many ways to overfit. The easiest way to overfit, I think we all know, is to have too many parameters in your model. So if you have one hundred data points in your data set and you have one million predictors in there, you can probably predict it exactly, because you have so many degrees of freedom. But that is only the most common way of overfitting. There are actually many other ways.

One very subtle way of overfitting is that you keep building different models and you test each model against the answer, which is your public leader board, and then you you see we have a model works well, you keep it and whenever it doesn't work well, then you can drop it. You can keep doing this. Actually, in a lot of situations, you can keep doing this and you will find that your model's performance on the public leaderboard just keeps growing. And there's no end to it. But the reality is that you are just overfitting the public leaderboard. I think that happens quite often.

In statistics (ok, I'm not a statistician, so let me know if there is a statistician in the audience), but in statistics we always use a five percent significance test. So if something is given a null hypothesis, you have less than a five percent chance of observing what you have observed, then you have to say that this is significant. But we run multiple comparisons very very often when doing models, its better if you try very hard. You randomly try one hundred things, then a few of them might turn out to be statistically significant, but that is because you tried so many things, so here the philosophy is "think more, try less."

(Photo: Owen is answering questions from the audience.)

Four secrets to success

This is the is the secrets slide. These are the secrets. The longer version is on the left and the shorter is on the right. The slides will be posted on Vivian's website or on Slideshare, so there's no need to take notes on the slides' content.

The short version is that (1) you need to be disciplined. The temptation to overfit public leaderboard is very strong. Sometimes, psychologically, I cannot control myself, just to overfit the public leaderboard, because that makes me feel good. We are all human. We need to be disciplined so we don't look embarrassed after the private leaderboard comes out, and we had been doing so well on the public leaderboard, then it flips and you say 'ahhh', you can't take pride in yourself anymore. It doesn't look good.

The next is to work hard. The Kaggle system rewards effort. You never lose anything by participating. So you can rank absolutely last in a contest, and you still, relatively speaking, do better than not participating. Participating more, gets you better results. Even within competition, trying to work hard, gets you better. We will talk on that, how to properly work hard, later.

The next one is learn from everyone. This also is a little bit against human nature. We all have our own pride, right? Occasionally, I would think, ok this time I just try to do everything myself, I don’t want to look how other people do it. There is a little bit of, like, hubris in there. But on the other hand, people other than you, always know better than you individually, right? Because there so many of them, there is only one you, so it’s pretty hard to compete against everybody. I always feel that really learning from others that you can really improve. There is really no pride in doing everything by yourself, otherwise. Actually, I’m not sure how many people can really invent all the mathematics that learned in elementary school. And I think that takes more than one lifetime to invent all that. So there is really no “oh I learned this myself.”

The next one is luck. So we are predicting very noisy data, and things we haven’t seen yet. And there is really no absolute to differentiator between noise and the signal. There is none. So the only way to really differentiate them is that you have more data. Which if data science competitions, there is none. You have everyone’s data, there is a signal to noise ration that’s pretty determined. So there is always nonzero luck. So if you participate in something and you don’t do very well in a particular competition, that’s probably just bad luck, but if you do well that’s all your effort. [Audience Chuckles]

I always get reminded, before I go to give a presentation to make sure that I bring something that’s concrete, that people can take away. The rest of the presentation is something that’s more concrete. But I personally feel, it’s how you approach the problem. The philosophy and strategy are more important than specific techniques. So I don’t want to say, just learn all the techniques and you’ll do well. So a lot of times it’s not about what you do, there are a lot of specific things you know is necessary to build a good model. The key there is actually how to allocate your effort. We all have a time budget. There is a deadline and then we have to do our day job and feed our kids, if you have kids. Turns out there is limited number of hours per day. It’s actually worth it to think about how much you want to allocate to doing feature engineering, how much time you want to allocate into trying different forms of models, and how much time you want to allocate into hyperparameter tuning. So actually, I do think about that consciously.

(Photo: Owen is explaining the technical tricks to the audience.)

Technical tricks of the trade

Now to talk about some technical tricks. Gradient Boosting Machines. I use GBM on everything that I can use GBM on. There are things that you can’t GBM. One example would be very large data. If you have 100 million observations, you probably don’t want to write GBM. But for anything that can use GBM, GBM. I probably overuse GBM. So why is GBM so good? GBM automatically captures, to a very large degree, nonlinear transformations and subtle and deep interactions in your features. GBM also treats missing values gracefully.

I use the R implementation of GBM, that is what I work with so often. There are at least four very good GBM implementation today. Two years ago there was only R that was good. But nowadays you can use either the R package, you can use scikit-learn package, its very good. And if you want go parallel there is H2o package and xgboost. They all are very good implementations. Each has their own quirks. But they are all able to do nonlinear transformation interactions.

Those two things are what people spend most of their time on, when we’re building linear models or generalized linear models. In the days when we had logistic regression or when we had only Poisson regression in the industry, in the insurance like we were. Whenever we are building models we are not really building models because we always run the same models, like Gamma regression. So what we are doing is looking at all the outliers, all the transformations, turning it to U-shapes, W-shaped, hockey stick shaped, whatever shaped. If you use GBM, it will take care of this for you, automatically thats why I use it so much. If you haven’t tried it please do try it.

Tuning parameters

GBM like all the other modern machine learning algorithms has a few tuning parameters. When your data set is small, you can just do a grid search. But actually GBM has three separate pretty orthogonal tuning parameters. If you want to do grid search, you actually do need a pretty big range. I usually use some rule-of-thumb kind of tuning, so it’s mostly for saving time. So if you do a very smart grid search, you can probably do better than the rule-of-thumb. But rule of thumb is very time saving.

The first set of tuning parameters is how many trees you want to build and the learning rate. They are reciprocal to each other. A Higher learning rate will get you to use fewer trees. I want to save time, right? Usually I just target 500-1000 and trees and tune the learning rate. The next one is just a common tuning parameter for decision trees (GBM is based on decision trees). The number of observations in the leaf node. You can look at the data, and get feel of how many observation you’ll need to get mean estimate. So if your data is very noisy then you make more and if the data is stable you probably need less. Interaction depth is a very interesting for the R version of the GBM. The R version of interaction depth describe how many splits it does; so, it’s not interaction depth as in the tree depth. If you have interaction depth of 11, that means you have above 10 leaf notes. It doesn’t mean that you will have a 1000 leaf nodes. Don’t be afraid to use 10; I use 10 very often.

High cardinality features

There is one thing that GBM does not do--actually, there is one thing that all the tree-based algorithms do not do well, which is dealing with higher cardinality categorical features. So, if you have feature, which has a categorical variable that has many many levels, throwing them into any tree based is basically a bad idea because the tree will either run super slowly if you are encoding all of them or just overuse that feature because it’s overfitted. So for high cardinality features--if you want to use them in the tree--you need to somehow encode them as numerical features.

There are few different ways of encoding. High cardinality features are very common. We often see things like zip code, or in medical data you see diagnosis code (there are tens of thousands of ICD9’s), or text features. One approach for encoding them is to build ridge regression. Starting a logistic regression is very simple, you can add regression based on your categorical features and then use prediction itself that will be numerical as an input to your GBM.

Stacking

That’s what people usually call stacking. Basically, you build a stack of different models–subsequent stages of models will use the previous stages of models’ output as their input. As I described, if you have text features or numerical features, you get all the text features into your ridge regression model, then you make a prediction. Then you put all your predictions side by side with all of your raw numerical features, and fit this in a GBM. And this usually works pretty well. If you haven’t tried it, you can replace these by a categorical features. And for a lot of Kaggle competitions, you just do this and you reach the top 25%. So people that run bottom half of kaggle probably aren’t using GBM. [Audience Chuckles] Because it is quite easy to rank in the top half. There are many beginners that really get into this on the bottom.

The one particular risk here is that if you use the same data to build the first stage model and then use the same data again on Model 2, very often the prediction from Model 1 will be overfitting in Model 2, because you already used the targeted variable in Prediction 1. Prediction 1 has a leakage in there. Basically it has leaked the information from the actual target. Whenever you put the Prediction 1 like that with numerical features, Prediction 1 will be given too much weight. The more you overfit Model 1 the more weight will be given to Prediction 1. So it can be quite bad. What you want to do here is use different data for Model 1 and Model 2. So you can split your data in half. Use half of the data for Model 1 and half for Model 2. This will give you a better model than using all the data for Model 1 and all the data for Model 2. You can swap them you can split the data half for A half for B, using all of the data in this way. This will usually give good results. If you’re not trying to win competitions, i.e. for practical purposes, I feel that this kind of model is already good enough for a lot of practical applications.

You can take this one step further if you have smaller data, where even half and half might be limiting your data too much, you can do something like cross-validation–you can split your data ten-fold and do 10 different Model 1’s, each one using nine to predict the other ones. You can then concatenate the models together so that each record will have an out-of-sample prediction from Model 1. Then you can avoid the overfitting problem in Model 2. So, that’s how you deal with categorical features.

Feature engineering

Once you can deal with the categorical features, GBM is good for 95% of general predictive modeling cases. You can make a GBM model a little bit better (i.e. for marginally boosting Kaggle ranking-- it may or may not be worth it for you). The reason is, GBM can only approximate interactions and non-linear transformations. So when GBM is approximating the transformations or interactions, it can’t differentiate noise versus signal, so inevitably you pick up noise. So if you know there are strong interactions, it’s better that you explicitly code them into the GBM. GBM’s feature engineering is just a greedy space search, so that it can’t really find very specific transformations. So, for example, a lot of times when we build a sales forecasting model, the best sales forecaster is actually the previous time period’s sales. If you want a GBM to automatically discover that, I think, actually, that’s pretty difficult, so you should code that, definitely

The second most often used tool by me is linear models, like generalized linear models, or regularized generalized linear models. I used to use glmnet very often, it’s also a very popular R package. So from a methodology perspective, glmnet is actually, all the generalized models are kind of opposite of a GBM. So all the generalized linear models are global models assuming everything is linear, and all the tree models are local models assuming everything is staircase shaped. So they compliment each other very well.

So linear models become quite important when your data is very very small, or when your data is very very big, for different reasons. When your data is very very small, you just don’t have enough signal to support a nonlinear relationships for interactions. It’s not that there isn’t; there always are nonlinear relationships in interactions. But if you don’t have enough data to detect them it’s better to stick with a simpler one, so that’s when the linear model works well. On the other end of the spectrum, and you really have billions and billions of observations, only linear models are fast enough for you, so everything else will never finish in our lifetime, so that’s why go back to linear models when the data is very large. So the linear models, when you do a model blend (GBM and glmnet complement each other well), you get a very nice boost in performance. The downside of glmnet or anything similar to this kind of model, is that it requires a lot of work. All the things GBM does for me automatically, I have to deal with them myself–I have to deal with all the missing values, all the outliers, all the transformations and interactions. It really takes a lot of time.

And the last thing I want to cover is regularization. My personal bias is this: In this day and age, if you are building linear models without any regularization, you must be really special. Always regularize. It’s required. There are two very popular regularization approaches, one is L1, one is L2, so basically L1 give you sparse models, L2 just makes everything’s parameter a little bit smaller. The sparsity assumption is a very good assumption. The book Elements of Statistical Learning describes why that is the case. If your problem is sparse in nature, assuming sparsity will get you a much better model than not assuming it. If your problem is not sparse in nature, then assuming it will not hurt that much. So the lesson is, always assume sparsity. Unless its a problem you know is not sparse.

So there are actually problems that we know is not sparse, like text mining, or if you’re doing zip code. If you think ‘what is sparse?,’ like there are three zip codes that are special, they have some kind of parameters and everything else is the same. That’s the same thing in text mining, if you assume that 500 words is very special and all the other 500 thousand are not special. So in that case, when you’re doing text mining, or doing high cardinality categoricals, that’s where you do not assume sparsity. But other than that it’s simple, assume sparsity.

First, I admit that I’m not a very good text miner, if that’s a word. I Google things and I read whatever other people write and try to follow their examples. My approach to text mining is the simpler stuff usually works better. As far as we have come in Machine Learning, the N-gram- and Naive Bayes-based approaches actually work surprisingly well, and a lot of times, actually, it’s quite hard to do better. Here on text mining, my view is make sure you get the basics. The basics, such as N-grams, and also the trivial features, such as how long the text is, how many words are in the text, and if there is any punctuation, those kinds of features are actually very important.

The last one is: a lot of problems that are heavy on text are not necessarily driven by text. This year’s KDD Cup is an interesting example. The KDD Cup, it’s a problem, it’s actually sponsored by a New York-based company. They have a website where you can donate to your local school’s teacher’s projects. So if you’re a teacher in an elementary school or a high school, you can post credits say “I”m doing this reading club with my students, I need 100 dollars to buy this carpet.” People really host those! If you sympathize with that effort you will say I’ll give you five dollars, I’ll give you ten people can add together, doner will chose. They setup the competition to see which project is more likely to get attention from people and more likely to be funded.

Teachers will put up essays and summaries of their projects. So it’s very nicely written—some people write very nice essays, not everybody. All text data are provided, also with the cost and type of project. Whenever you have unstructured data, the text always is way bigger than the structured data, so that I always feel that I’m obligated to use the text a lot. But it turns out that usually some other features are much more important. For example, projects that cost less are much more likely to be funded. That doesn’t need the essay to tell you. If you ask people for one thousand dollars for a laptop, no essay will save you. So, it’s much easier to ask people for math books. That’s what the data tells us.

Blending

If you do a competition on Kaggle, then you will learn this. It’s almost never that a single model will win a competition. People always build a variety of models and blend them. Blending is just a fancy word for a weighted average of models. You can do a little bit fancier than weighted average, but not that much. I usually just use weighted average. My rationalization for why blending works, is because all of our models are wrong. As George Box said, “All models are wrong, but some are useful.” I would like to think all my models are wrong, but mine useful. “It’s very hard to make predictions, especially about the future.”

When we study regression analysis, or something similar, in school, we always start or models with Independent Identical Distribution (IID) Gaussian errors. In my entire life, I have never seen a real data set with that distribution. It never happens. I don’t know where it should happen, but it never happens. So our assumptions are never right. So whatever you assume, unless you’re doing a simulated data set, everything is wrong. But the hope is that our models are wrong in different ways, so that when you average them, their wrongness kind of cancels each other’s out.

There are a few things to keep in mind. One is that simpler is usually better when things are uncertain. If you are not sure, just add Model 1, Model 2, Model 3 and divide by three. That’s usually better than taking the parameters, unless you have a lot of data. The next one is a very easy and useable strategy which is to intentionally overfit the public leaderboard. And if you do it carefully it might give you a nice boost. So what you do with this, you build many different models and you submit them to the public leaderboard individually and then, based on their score, you keep the better ones and then average them, and that’s your blend. So based on the public leaderboard that might give you a nice boost. This model will work better than any individual model submitted. So either that will work better on the private leaderboard or not depend on how much data you have on the public leaderboard. If you have a lot of data on the public leaderboard, this will help you because when you’re doing this you are actually implicitly using the public leaderboard data as your training data. So you actually have the advantage over people who don’t do this. A real advantage, if the public leaderboard data is large enough. But if it’s not large enough you will do terribly bad.

One thing that’s very useful when doing blending is that you are not going after the strongest individual model. You always want a model that works but you want a model that’s different. Sometimes I will intentionally build a weak model. They help a lot when you add them to a strong model. So the key is the diversity—I’m sure HR people will be very happy to hear this, we need diversity in model building. This is true, it’s actually scientifically proven it’s good.

Building (and blending) diverse models

There are many ways to build diverse models. You can use different tools. You use different model structures. You can use different subsets of features. You can use different subsampled observations. You can build a model so it’s weighted. You can build both weighted and unweighted numbers. Usually the unweighted one will work worse. But if you can take 90% of the weighted one and a bit of the unweighted one it will work better than just the weighted. But here is try and build those models more or less blindly; try not to look at the answer. You know the public leaderboard is always there and you always want to test them. Try not to use that as a guide to filter out the models unless you are confident that the dataset is large enough. It’s a total judgement call based on the noise and the size of the data.

Let me try to address this question objectively, although it’s not possible—obviously I like competitions. But, sometimes people ask me, “other than being a fun game, is there anything really useful from competitions?” First of all, let’s acknowledge that it’s a fun game. The fun game itself is entertaining so we shouldn’t dismiss a fun game as something not useful. But beyond that, there are two ways of looking at this.

The model in a competition only covers an actually very small portion of the necessary work to make the data science very useful in this work. So to make the model really useful, we need at least another three pieces. One is that we need to select the right problem to solve. So if you find a problem that’s interesting only abstractly, but there is no real-world application, there is no immediate value. The next one is we need to have good data. The model is certainly a garbage in, garbage out problem. If you don’t have good data you cannot expect to have good models. The third one is we need to make sure the models are used the right way. A lot of the time it’s possible to build very good models, but then they are implemented wrong. It happened to me in the past; you have a good pricing model in insurance and when they swap the parameters like x1 parameter goes to x2. And then you figure out, this doesn’t work, and then say. “oh they swapped it.” And I never asked why they swapped it, but they do. With all these three, plus the right generalizable model and then you’ll have the right solution. So, the fun part of building a model in Kaggle is only a small part, we all need to keep that in mind in reality.

But aside from that, a competition helps us in very many ways. So I have two ways it helps the sponsor. Iif you have a company, either a start-up company or enterprise. Building a computation for your own data set is very helpful. There are two ways this is very helpful. One is to measure to a degree the signal versus noise in the data so if you put up reasonable prize money needs you can request 99% valid signal and that will be squeezed out by other people on Kaggle. Kaggle people are quite good at that. The second way is [finding out] if there are any flaws in your data so a lot of times it is because of the data collection process issues. You may have predictors that are not real predictors. Such as if a particular field is missing, you always have a yes or no as an answer. So if you have anything like that the Kaggle crowd will find it for you so you can go back to fix it. The model is not useful if you have that problem but at least you can fix it.

But as a participant, I learned two major things on Kaggle, one is that I have to build a generalizable mode—just predicting what I know is useless. That discipline is a hard discipline to learn. The next one is to fully realize day to day that there are other approaches and there are other people with better ideas. So this always keeps me on my toes and learning new things. Otherwise, it’s really easy for me to sit in my corner and think “Oh, I do really nice modelling work.”

Case study I: Amazon

So let me just give two examples of two competitions I did okay in. One is Amazon’s user access competition. This is one of the most popular competitions. It used to be the most popular competition, but recently the Higgs competition has more participants. This one has about 1700 teams participating. I got second place on this one. This is one of the most interesting experience for me. I was the no. 1 in the public leaderboard. But this time I didn’t try to overfit the public leaderboard. Sometimes I do, but this time I didn’t. I still lost somehow to another team. Never figured out why.

The [challenge was] to use anonymized features to predict if employee access request would be granted or denied. If you work, you know for a large company, this is quite often; if I need access that folder, someone needs to say ‘yes’ or ‘no.’ And all the features are categorical: resource IDs, manager IDs, user IDs, and department IDs. Many features have many many levels. But I wanted to use GBM, right. This is how I converted all the categorical data into numerical. There are two ways I used to encode categorical features into numerical. One is how many that levels appear in the data. You can do this for all your categorical features and all the interactions for categorical features. And another one is using the average response—the average y for that level, as a predictor. So here you have to do something slightly more complex, than doing a straight average because a straight average will lead to overfitting on certain levels.

Beyond those encodings, the final model is a linear combination of three different kinds of trees, plus the glmnet and other features, plus two subset features base trees. So this is a blend that I tuned manually. At that time, I did not fully understand the online learners like a Vowpal Wabbit. So I didn’t use it. In retrospect, if I had used them, I would have derived a better model because they have a very different algorithm. This competition had a particular requirement that everybody had to publish their code. So my code is up there on the Github. Do not evaluate my software engineer skills when you read the code.

So here is something that is very easy to do actually for encoding categorical feature by doing the mean response. This is a very simple data set, we have a categorical feature, the UserID, And for the level A1 we have six observations. Four of them are in the training data, and two of them are in the test data. For the training data you have the response variable then 0 1 1 0, and in the test data you wouldn’t have the response variable. So here, I show how to encode this into a numerical. So what you do it to calculate, for the training data, the average response for everything but that observation. So for the first one, it’s 0. For this particular observation there are 3 other observations in the same level, there’s number 2 3 4. And there’s two out of three (that’s why it’s 0.667). The second one, it also has 3 other observations, but it’s 1 0 0 (so it’s 0.333). Do not use itself. If you use it itself, then you will be overfitting the same data.

Sometimes it also helps to add random noise on to your training set data. It helps you smooth over very frequently-occurring values. For example, if you do have this, you will see that [these numbers can be thrown] into GBM, GBM goes nuts because it treats them as special values. So if you add small noise on top of that, it actually makes it a little more real from a data perspective. You do not need any such special treatment for the testing data. Testing data is a straight average of the response value for that level, for the training. So two out of four (that’s 0.5). So the basic thing I need to do to use categorical features in GBM. This is much easier to do compared to building a separate ridge regression, and I do this very, very often. That’s Amazon. The amazon competition is a very simple data set. Mostly do feature engineerings on anonymized categorical features. And the response is 1 or 0.

Case study II: Allstate

So the Allstate competition is unique in a different way in that it has very structured target variables. So it has seven correlated targets, so you have A B C D E F G which represent the options people choose when people buy personal auto insurance policies from Allstate. So you can choose, like you want comprehensive coverage, etc. So this turned out to be a very difficult competition for two reasons. Actually, a lot of people hate this competition a lot. One [reason is that] the evaluation criteria is all or nothing. So you have to predict all seven correctly to get a point. If you predict anything wrong it’s zero. So basically partial answers get no credit, for this one. We had a long debate on the Kaggle forum but the Kaggle people didn’t budge. I heard this story even Allstate was upset with us so even the sponsor wasn’t happy with us.

So the [challenge] is to predict the final purchase option based on earlier transactions. But whichever option they choose for their last known transaction is actually a very good predictor for the final purchase option. So the model is that if you just use the trivial “last quoted” as the predictor, no change. It’s right about 53.269%. You really need a lot of decimals to see the difference, that’s why they’re decimals. And then I got the third place in the competition—I was able to improve that by 0.444% and then another one’s solution improved that by 0.03%. I’m proud to say that this is statistically significantly better. I don’t know if in business it is significantly better, but statistically speaking, it’s significant.

The challenge to this is actually mostly on the targeted variable side, so that A B C D E F G are not independent of each other, actually, every one of them can be predicted very well. More than 90% accurate. But if you put them together they have actually very interesting structures. And then the two challenges are to capture the correlation and not lose the baseline. So I build chained models for the dependency. So I first build a freestanding F model, then assuming you know F, you build a G model, etc. And you can actually change the order of models to put your free models first and your independent models later. And this works quite well. This is a little bit more theoretically appealing because it’s a very systematic model. So the next one is, so as not to lose the baseline, we build two-stage models. We build one model to decide: either use the baseline or the prediction. And then you want to use your prediction, then use your prediction, otherwise stick with the baseline. So at least for the dummy cases, you do as well as the baseline, and for where you can find improvement, you improve it. So I think that’s how you can improve upon very strong baseline.

Closing remarks

To finish, there are kind of a few trivially useful pointers. If you really want to learn how to build generalized models, participate in the competition. Just watching it doesn’t count. Form my own perspective, the more frustrated I am doing it, the more I learn after. You really get a good learning experience that way. If you’re just observing as a spectator, you really don’t get into the problems. They also post links to papers, to books. I need to admit that I have no PhD, my education was in engineering, related but neither in statistics or computer science. So I learned a lot just reading books and Kaggle forums. One book that really helped was Elements of Statistical Learning. It’s highly recommended. It’s like the bible of machine learning. It’s also freely available—legitimately—on the internet. Thank you.

(Photo: Founder of NYC Data Science Academy, Vivian S. Zhang and Advisor of NYC Data Science Academy, Owen Zhang took a photo after the event.)

(Photo: Founder of NYC Data Science Academy, Vivian S. Zhang and Advisor of NYC Data Science Academy, Owen Zhang took a photo after the event.)

manager

View all articlesSubscribe Here

Posts by Tag

- Meetup (101)

- data science (68)

- Community (60)

- R (48)

- Alumni (46)

- NYC (43)

- Data Science News and Sharing (41)

- nyc data science academy (38)

- python (32)

- alumni story (28)

- data (28)

- Featured (14)

- Machine Learning (14)

- data science bootcamp (14)

- Big Data (13)

- NYC Open Data (12)

- statistics (11)

- visualization (11)

- Hadoop (10)

- hiring partner events (10)

- D3.js (9)

- Data Scientist (9)

- NYCDSA (8)

- Web Scraping (8)

- Career (7)

- Data Scientist Jobs (6)

- Data Visualization (6)

- Hiring (6)

- Open Data (6)

- R Workshop (6)

- APIs (5)

- Alumni Spotlight (5)

- Best Bootcamp (5)

- Best Data Science 2019 (5)

- Best Data Science Bootcamp (5)

- Data Science Academy (5)

- Demo Day (5)

- Job Placement (5)

- NYCDSA Alumni (5)

- Tableau (5)

- alumni interview (5)

- API (4)

- Career Education (4)

- Deep Learning (4)

- Get Hired (4)

- Kaggle (4)

- NYC Data Science (4)

- Networking (4)

- Student Works (4)

- employer networking (4)

- prediction (4)

- Data Analyst (3)

- Job (3)

- Maps (3)

- New Courses (3)

- Python Workshop (3)

- R Shiny (3)

- Shiny (3)

- Top Data Science Bootcamp (3)

- bootcamp (3)

- recommendation (3)

- 2019 (2)

- Alumnus (2)

- Book-Signing (2)

- Bootcamp Alumni (2)

- Bootcamp Prep (2)

- Capstone (2)

- Career Day (2)

- Data Science Reviews (2)

- Data science jobs (2)

- Discount (2)

- Events (2)

- Full Stack Data Scientist (2)

- Hiring Partners (2)

- Industry Experts (2)

- Jobs (2)

- Online Bootcamp (2)

- Spark (2)

- Testimonial (2)

- citibike (2)

- clustering (2)

- jp morgan chase (2)

- pandas (2)

- python machine learning (2)

- remote data science bootcamp (2)

- #trainwithnycdsa (1)

- ACCET (1)

- AWS (1)

- Accreditation (1)

- Alex Baransky (1)

- Alumni Reviews (1)

- Application (1)

- Best Data Science Bootcamp 2020 (1)

- Best Data Science Bootcamp 2021 (1)

- Best Ranked (1)

- Book Launch (1)

- Bundles (1)

- California (1)

- Cancer Research (1)

- Coding (1)

- Complete Guide To Become A Data Scientist (1)

- Course Demo (1)

- Course Report (1)

- Finance (1)

- Financial Data Science (1)

- First Step to Become Data Scientist (1)

- How To Learn Data Science From Scratch (1)

- Instructor Interview (1)

- Jon Krohn (1)

- Lead Data Scienctist (1)

- Lead Data Scientist (1)

- Medical Research (1)

- Meet the team (1)

- Neural networks (1)

- Online (1)

- Part-time (1)

- Portfolio Development (1)

- Prework (1)

- Programming (1)

- PwC (1)

- R Programming (1)

- R language (1)

- Ranking (1)

- Remote (1)

- Selenium (1)

- Skills Needed (1)

- Special (1)

- Special Summer (1)

- Sports (1)

- Student Interview (1)

- Student Showcase (1)

- Switchup (1)

- TensorFlow (1)

- Weekend Course (1)

- What to expect (1)

- artist (1)

- bootcamp experience (1)

- data scientist career (1)

- dplyr (1)

- interview (1)

- linear regression (1)

- nlp (1)

- painter (1)

- python web scraping (1)

- python webscraping (1)

- regression (1)

- team (1)

- twitter (1)