Crawler with Selenium

Posted by Darien Bouzakis

Updated: Jan 13, 2021

Introduction

The crawler is made up of several components that make unstructured data accessible for cleaning. Because the data we want to scrape is financial in nature, we crawl several webpages and give the data structure along the way. That structure comes from two steps: collecting the endpoints (URLs), and writing out features for further analysis. Both steps are described in full below, with visualizations.

Unstructured

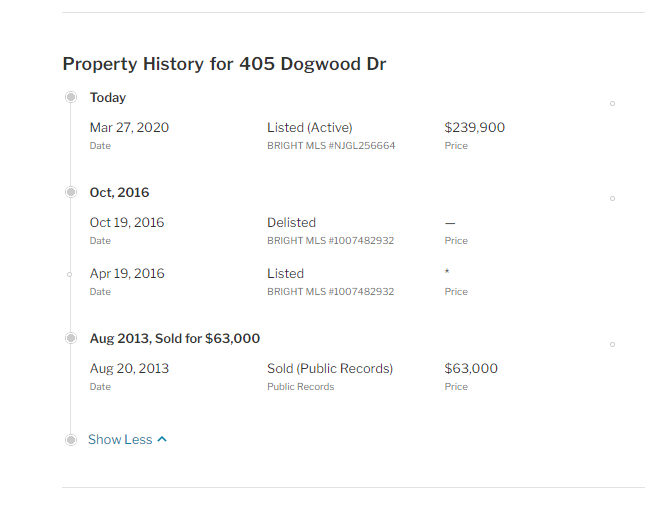

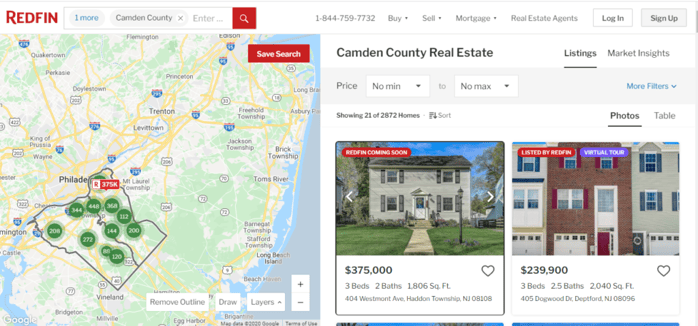

The first set of data is the URL of each home's designated webpage. These URLs are scraped and written to a comma-separated values (CSV) file that a separate function can read in. The initial arguments were passed to Google Chrome's driver as a list of strings. Because these strings are not complete endpoints, we reformat each one in the list before iterating through the websites we need.
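As a rough illustration of that step, the sketch below turns partial strings into full county endpoints and round-trips them through a CSV file. The function names, the `url` column header, and the file layout are assumptions made for this example and are not taken from the project's scripts; the sample fragment is simply the path of the county endpoint listed further down.

```python
import csv

BASE = "https://www.redfin.com"  # site root; the fragments below are appended to it


def build_county_urls(fragments, base=BASE):
    """Turn partial path strings into complete endpoints."""
    return [f"{base}/{fragment.strip('/')}" for fragment in fragments]


def write_urls(urls, path):
    """Write one URL per row under a single 'url' header (hypothetical layout)."""
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["url"])
        writer.writerows([url] for url in urls)


def read_urls(path):
    """Read the URLs back so a separate script can iterate over them."""
    with open(path, newline="", encoding="utf-8") as handle:
        return [row["url"] for row in csv.DictReader(handle)]
```

Calling `build_county_urls(["county/1894/NJ/Camden-County/filter/mr=5:1898"])` gives a one-item list holding the full county endpoint, which `write_urls` can then save for the next script.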

Because the site embeds JavaScript in at least its user interface (UI), Selenium was the chosen tool, after first attempting Beautiful Soup and other less cumbersome tools. Each XPath has to be tested by running the file from a shell. Other, sometimes older, programming interfaces may work depending on how a website has been built. Regardless, trial and error is required, and printed checks were made for both the exception pages and the county pages. In more detail, the button at the end of each page was used to retrieve a more detailed endpoint. That endpoint is the feature read in by the following script.

1. Endpoint - https://www.redfin.com/county/1894/NJ/Camden-County/filter/mr=5:1898

2. Endpoint - https://www.redfin.com/NJ/Deptford-Township/405-Dogwood-Dr-08096/home/105270038
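To make the difference between the two endpoints concrete, here is a minimal sketch of how home links could be collected from a county page with Selenium. It is not the project's actual script: the function names, the catch-all XPath `//a[@href]`, and the headless option are assumptions for illustration, and the real XPath has to be found by trial and error as described above. The locator strategy is passed as the plain string `"xpath"`, which is the value of Selenium's `By.XPATH` constant.

```python
from urllib.parse import urlparse


def is_home_url(href):
    """True for links shaped like .../home/<numeric id>, as in endpoint 2."""
    parts = urlparse(href).path.strip("/").split("/")
    return len(parts) >= 2 and parts[-2] == "home" and parts[-1].isdigit()


def collect_home_links(driver, county_url, xpath="//a[@href]"):
    """Load a county page and return the unique home links found on it."""
    driver.get(county_url)
    links = []
    for anchor in driver.find_elements("xpath", xpath):
        href = anchor.get_attribute("href")
        # Printed check, in the spirit of the trial-and-error runs above.
        print("found:", href)
        if href and is_home_url(href) and href not in links:
            links.append(href)
    return links


def make_driver():
    """Start Chrome through Selenium (imported here so the helpers work without it)."""
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    options.add_argument("--headless")  # assumption: no visible browser window needed
    return webdriver.Chrome(options=options)
```

A run would create the driver with `make_driver()`, pass it to `collect_home_links` along with a county endpoint, and call `driver.quit()` when finished.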

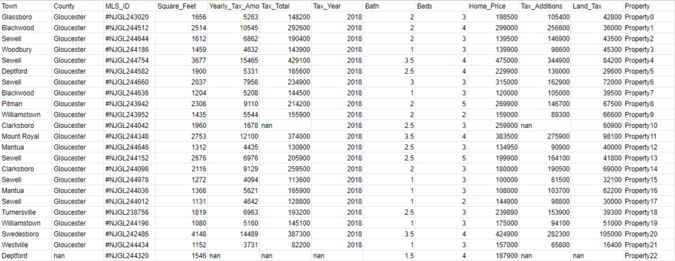

Structure

Once each home's endpoint was retrieved, the main scraping took place in 'detail_page.py'. The divisions taken from the page's HTML are Town, County, MLSID, Square feet, Table_Appreciation, Bath, Beds, Table_Event, Home Price, Table_Date, Land Value, Table_Source, Table_Price, Tax Year, Tax Amount, Tax Additional, and Tax Total. These columns have been created and cleaned of frivolous characters, so each value has gone through some level of preprocessing. This cleaning has mainly been done with regular expressions.
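The kind of regular-expression cleaning described here can be sketched as follows. The helper names and the sample strings are hypothetical and do not come from 'detail_page.py'; they only show one way to strip currency symbols, commas, and unit labels from scraped text.

```python
import re

# A number with optional thousands separators and an optional decimal part.
NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def to_number(text):
    """Return the first number found in text as a float, or None if there is none."""
    if text is None:
        return None
    match = NUMBER.search(text)
    if match is None:
        return None
    return float(match.group().replace(",", ""))


def clean_text(text):
    """Collapse runs of whitespace and trim the ends of a scraped string."""
    return re.sub(r"\s+", " ", text).strip()
```

For instance, `to_number("$245,000")` yields `245000.0`, and a value with no digits comes back as `None`, which leaves the null handling to a later step.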

The structure of the file is rather simple, but each predictor, or independent feature, can be quite cumbersome. After each change to an expression, the file must be rerun to see whether its output removed the unwanted character strings or formatted the numerical values. Note also that if the file is run enough times, the bot will be flagged by the site's security measures.
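One possible way to reduce those repeated requests, which this project did not necessarily use, is to save each page's HTML the first time it is fetched and rerun the expressions against the saved copy. The sketch below assumes a hypothetical `fetch` callable (for example, a function that loads the URL in Selenium and returns `driver.page_source`) and a hypothetical `html_cache` folder.

```python
import hashlib
from pathlib import Path


def cached_page(url, fetch, cache_dir="html_cache"):
    """Return the HTML for url, calling fetch(url) only when no saved copy exists."""
    folder = Path(cache_dir)
    folder.mkdir(parents=True, exist_ok=True)
    # Hash the URL so it can be used as a safe file name.
    name = hashlib.sha256(url.encode("utf-8")).hexdigest() + ".html"
    path = folder / name
    if path.exists():
        return path.read_text(encoding="utf-8")
    html = fetch(url)
    path.write_text(html, encoding="utf-8")
    return html
```

With this in place, only the first run for a given URL touches the website; later runs that tweak a regular expression read from disk.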

Conclusion

Further analysis and exploration should be done with this information. Before that, feature transformation is needed to handle null values and other potential bugs that may arise. This and other mapping can also be done as the exploration occurs. Selenium is a powerful tool that, where advisable, can be combined with or swapped for other scraping tools.

Github: Scrapper
